We introduce EgoPoints, a benchmark for point tracking in egocentric videos. We annotate 4.7K challenging tracks in egocentric sequences. Compared to the popular TAP-Vid-DAVIS evaluation benchmark, these include 9x more points that go out of view and 59x more points that require re-identification (ReID) after returning to view. To measure how models perform on such challenging points, we introduce evaluation metrics that separately monitor tracking of points that are in view, points that are out of view, and points that require re-identification.
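To make the split between in-view and re-identification points concrete, here is a minimal sketch. The accuracy follows the standard TAP-Vid δavg definition: the fraction of evaluated frames whose predicted position lies within 1, 2, 4, 8 and 16 pixels of the ground truth (at 256x256 resolution), averaged over the five thresholds. The ReID frame mask is a hypothetical reading of the ReID metric (score only frames where a point is back in view after having left it), not necessarily the exact EgoPoints definition. The names `reid_mask` and `delta_avg` are illustrative, and occlusion inside the frame is ignored for simplicity.

```python
from collections.abc import Sequence
from math import hypot

# Pixel thresholds of the TAP-Vid delta_avg metric (defined at 256x256 resolution).
THRESHOLDS = (1, 2, 4, 8, 16)


def reid_mask(in_view: Sequence[bool]) -> list[bool]:
    """Mark frames where a point is back in view after having left it (hypothetical ReID rule)."""
    mask, seen, left = [], False, False
    for v in in_view:
        if v:
            mask.append(left)  # True only if the point has already gone out of view once
            seen = True
        else:
            mask.append(False)
            left = left or seen  # leaving the view only counts after the point was seen
    return mask


def delta_avg(pred, gt, mask) -> float:
    """Average over THRESHOLDS of the fraction of masked frames with error below the threshold."""
    errors = [hypot(px - gx, py - gy)
              for (px, py), (gx, gy), m in zip(pred, gt, mask) if m]
    if not errors:
        return float("nan")  # no frames to evaluate
    fractions = [sum(e < t for e in errors) / len(errors) for t in THRESHOLDS]
    return sum(fractions) / len(fractions)


# One track over three frames: the point leaves the view in frame 1 and returns in frame 2.
gt = [(10.0, 10.0), (300.0, 10.0), (12.0, 12.0)]
pred = [(10.0, 10.0), (0.0, 0.0), (15.0, 16.0)]
in_view = [True, False, True]

print(delta_avg(pred, gt, in_view))             # in-view frames only  -> 0.7
print(delta_avg(pred, gt, reid_mask(in_view)))  # frames after re-entry -> 0.4
```

The same tracker output scores lower on the ReID frames because the only prediction made after the point re-enters the view is 5 pixels off, which shows why a separate ReID score can expose failures that an aggregate in-view score hides.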

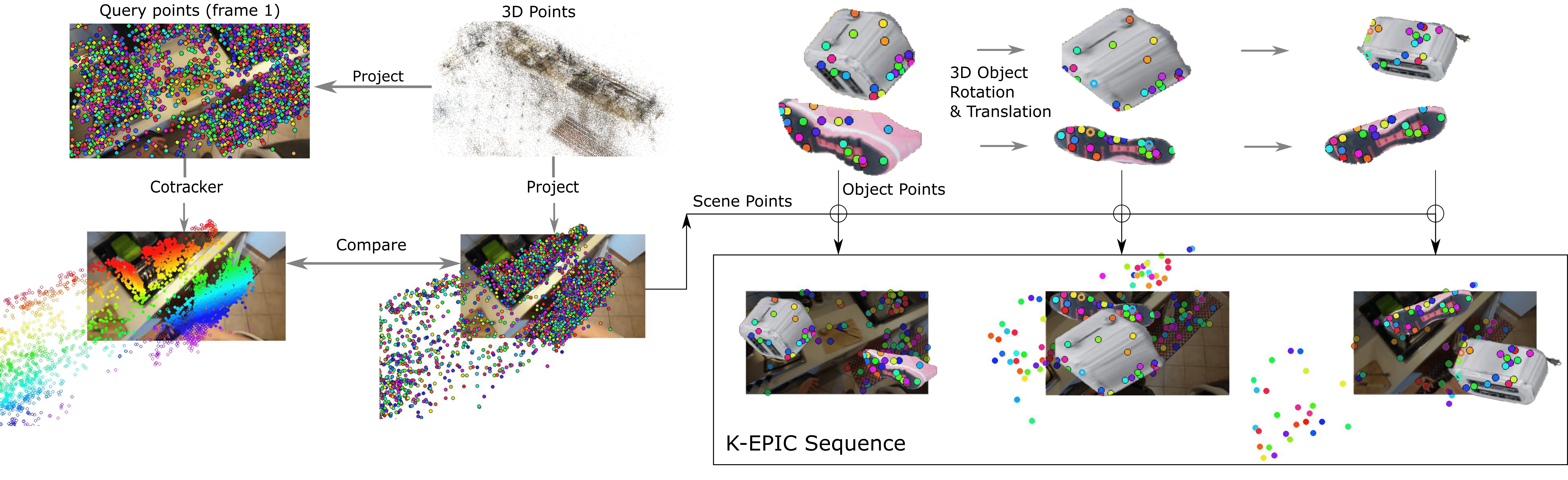

We then propose a pipeline to create semi-real sequences with automatic ground truth. We generate 11K sequences by combining dynamic Kubric objects with scene points from EPIC Fields. When we fine-tune state-of-the-art methods on these sequences and evaluate them on our annotated EgoPoints sequences, CoTracker improves across all metrics, including a gain of 2.7 percentage points in tracking accuracy δ★avg and 2.4 points in accuracy on ReID sequences (ReIDδavg). PIPs++ also improves, by 0.3 points in δ★avg and 2.2 points in ReIDδavg.
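Ground truth can be automatic because static scene points have fixed 3D positions, so once the camera pose of every frame is known, each point's 2D track and its out-of-view frames follow from projection. The sketch below illustrates that idea under stated assumptions. It assumes world-to-camera poses `(R, t)` and pinhole intrinsics `(fx, fy, cx, cy)`, such as could be obtained from EPIC Fields reconstructions, plus optional per-frame binary masks of the composited Kubric objects. The functions `project_point` and `track_scene_point` and the rule that a covering object pixel marks the point as occluded are hypothetical illustrations, not the actual K-EPIC implementation. Tracks of the Kubric objects themselves would come from the Kubric renderer and are not covered here.

```python
from collections.abc import Sequence
from typing import Optional

Vec3 = tuple[float, float, float]
Mat3 = Sequence[Sequence[float]]


def project_point(X: Vec3, R: Mat3, t: Vec3,
                  fx: float, fy: float, cx: float, cy: float) -> Optional[tuple[float, float]]:
    """Pinhole projection of a world point; returns (u, v), or None if behind the camera."""
    # World -> camera coordinates, X_c = R X + t (world-to-camera convention assumed).
    xc = [sum(R[i][j] * X[j] for j in range(3)) + t[i] for i in range(3)]
    if xc[2] <= 0:
        return None
    return (fx * xc[0] / xc[2] + cx, fy * xc[1] / xc[2] + cy)


def track_scene_point(X, poses, intrinsics, width, height, object_masks=None):
    """Per-frame (uv, in_view, occluded) ground truth for one static scene point."""
    fx, fy, cx, cy = intrinsics
    track = []
    for f, (R, t) in enumerate(poses):
        uv = project_point(X, R, t, fx, fy, cx, cy)
        # Out of view: behind the camera or projected outside the image bounds.
        in_view = uv is not None and 0 <= uv[0] < width and 0 <= uv[1] < height
        occluded = False
        if in_view and object_masks is not None:
            # Hypothetical rule: a composited object covering this pixel hides the point.
            occluded = bool(object_masks[f][int(uv[1])][int(uv[0])])
        track.append((uv, in_view, occluded))
    return track


# The camera slides sideways so the point drifts right and finally leaves the 128x128 frame.
I3 = ((1, 0, 0), (0, 1, 0), (0, 0, 1))
poses = [(I3, (0, 0, 0)), (I3, (2, 0, 0)), (I3, (5, 0, 0))]
for uv, in_view, occluded in track_scene_point((0, 0, 5), poses, (100, 100, 64, 64), 128, 128):
    print(uv, in_view, occluded)
```

Because the `in_view` flags come straight from geometry, camera motion that turns a point out of the frame and back yields out-of-view and re-identification labels with no manual annotation.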

K-EPIC - Training data

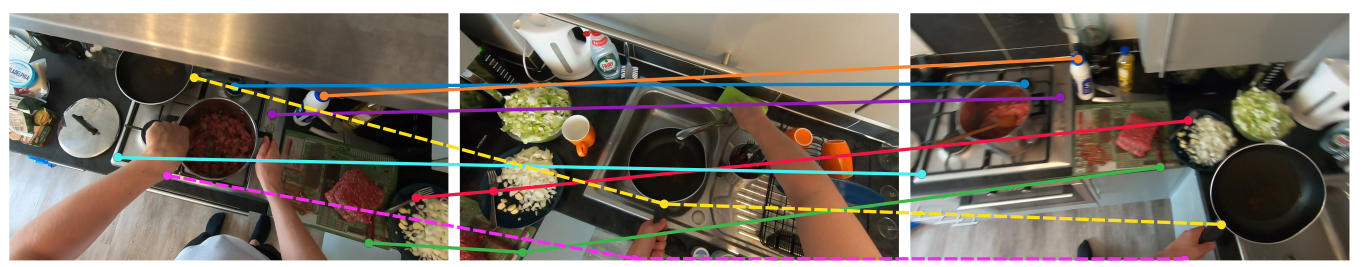

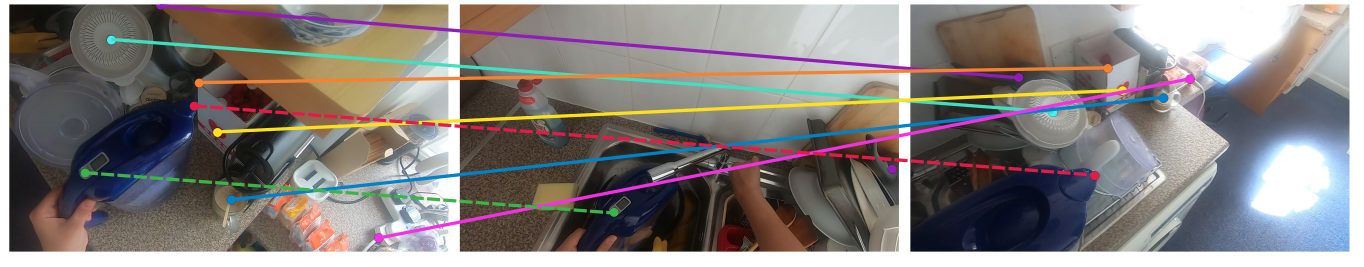

EgoPoints - Evaluation data